Searching for the best AI video generator API to generate videos fast, at scale, and without breaking the bank?

At Shotstack, we build video infrastructure that powers modern applications and video automation for businesses. Our users rely on AI-generated assets and videos to keep up with the demand for digital media in 2025. These tools are incredibly useful for anyone looking to integrate video creation into their apps, automate personalized marketing at scale, or generate creative content quickly.

To help the community navigate this space, we tested and evaluated the top AI video generation APIs available today.

Here’s the TL;DR and a table summarizing our findings:

- Best API for AI video editing automation: Shotstack

- Best for corporate videos: Synthesia

- Best for marketing & social videos: HeyGen

- Best for personalized, interactive avatars: D-ID

- Best for interactive training & E-learning: Colossyan

- Best for creative and artistic production: Runway

- Best for API-driven creative content: Kling

Note: This guide only includes AI video generators that offer accessible public APIs for developers, not just web apps.

AI video generation API comparison table (2025)

Here’s a high-level look at the top contenders in the AI video generation API arena.

| Platform | Core Features | Video Realism | Customization | Primary Use Cases | API & Integration | Pricing Model |
|---|---|---|---|---|---|---|
| Synthesia | Text-to-video with 230+ AI avatars; 140+ language AI voiceover; video templates. | Highly realistic presenter avatars for polished corporate videos. | Template-based; brand assets; custom “digital twin” avatars. | Enterprise training, marketing, internal communications. | API on higher-tier plans for automation; SOC 2 compliant. | Subscription (Starts ~$29/mo). |
| HeyGen | Text/image-to-video with 500+ AI avatars; photo/video avatar cloning; AI translation. | Ultra-realistic human avatars with expressive lip-sync. | Extensive; stock/custom avatars, control over expressions & outfits. | Marketing, product demos, multilingual content localization. | Robust API for generation & translation; Zapier integration. | Freemium (API from $99/mo). |
| D-ID | Talking head video from any image + script; real-time streaming avatar API. | Photorealistic faces from photos with natural lip-sync. | Moderate; upload any portrait as an avatar, choose from TTS voices. | Personalized outreach, AI customer service agents, e-learning. | Developer-focused REST API; low-latency streaming mode. | Subscription (API plans from $18/mo). |
| Colossyan | Script-to-video with AI actors; 600+ AI voices; interactive video features (quizzes). | Realistic presenter avatars, supports two-way dialogues. | High; embed media, create custom avatars from your own footage. | Training & education, scenario-based learning, onboarding. | Full API for automating personalized training videos. | Freemium (Starts free, paid from $19/mo). |
| Runway | Generative video from text/image/video prompts; advanced motion brush tools. | AI-generated scenes (surreal to cinematic); character consistency. | Moderate; use reference images/videos for style; AI “directing” tools. | Creative content, short films, music videos, VFX ideation. | API for programmatic access to generative models. | Credit-based (Starts free, paid from $15/mo). |
| Kling | Text-to-video with multiple visual styles; virtual try-on API for fashion. | High-quality AI scenes (cinematic to anime). | Guide scenes with start/end frames; motion brush tool. | Creative content, advertising, virtual clothing try-ons. | Full developer API platform for business integration. | Prepaid Resource Packages. |
| Shotstack | Cloud video editing API unifying generative AI and NLE editing on a timeline. | Asset-agnostic; depends on inputs (real footage, AI assets, etc.). | Extremely high; full programmatic control over layout, media, effects. | Automated video workflows, personalized marketing, data-driven videos. | Pure dev-focused API service; SDKs, webhooks, Zapier/Make support. | Pay-as-you-go (From $0.20/rendered minute). |

Testing Methodology

To ensure a fair and consistent evaluation of each AI video generation API, we applied the following testing methodology:

- Setup & Access: Registered with each platform to access public APIs or sandbox environments. For platforms with gated or enterprise-level access (e.g., Sora, Google Veo 3.1), we reviewed available documentation and tested where access was granted.

- Prompt Consistency: Used the same core prompts across platforms so that output quality and realism could be compared like for like.

- Core Feature Testing:
  - Input flexibility (text, image, audio)
  - Output quality (resolution, realism, audio sync)
  - Customization options (avatar control, layout, scene design)
  - Speed and reliability
  - Documentation and developer experience

1. Shotstack: Best API for AI video editing automation

Alright, we know what you’re thinking. But hear us out, and let us explain why Shotstack is one of the best APIs for developers looking for AI video generation platforms.

Shotstack is not just an AI video generator; it’s a video editing automation platform that combines different functionalities into one powerful API. It’s an assembly line in the cloud, letting you combine various AI-generated assets (images, voiceovers, avatars, etc.) and edit them together programmatically on a timeline.

With Shotstack, a developer can do things like: “Generate an image with this prompt, generate a voiceover of this script, take a given avatar video clip, and composite them together with background music and a title card, then render it all as a final video”, all through one API call or workflow. Without Shotstack, you might have to call separate APIs (one for image generation, one for TTS, and one for video editing) and then manually stitch the results using convoluted FFmpeg scripts.
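To make the quoted workflow concrete, here is a minimal Python sketch that builds such a render payload as a plain dictionary: a title card, then a third-party avatar clip, then an AI-generated image narrated by an AI voiceover, all over a music bed. The `timeline`/`tracks`/`clips` nesting mirrors the curl example at the end of this article; the `title` asset, the `soundtrack` field, the `text-to-image` and `text-to-speech` asset types, their `prompt`/`text`/`voice` fields, and the voice name `Matthew` are assumptions to verify against the Shotstack API reference. The function name and all URLs are hypothetical.

```python
import json


def build_assembly_edit(title: str, avatar_url: str, avatar_seconds: float,
                        image_prompt: str, script: str, narration_seconds: float,
                        music_url: str) -> dict:
    """Return a render payload: title card -> avatar clip -> AI image with narration.

    Asset types and field names are assumptions; check the Shotstack API reference.
    """
    title_len = 3.0
    image_start = title_len + avatar_seconds  # the image follows the avatar clip
    return {
        "timeline": {
            "background": "#000000",
            "soundtrack": {"src": music_url, "effect": "fadeOut"},
            "tracks": [
                # Track 0: the title card text (assumed to render above later tracks).
                {"clips": [{"asset": {"type": "title", "text": title},
                            "start": 0, "length": title_len}]},
                # Track 1: the avatar clip, then the AI-generated image.
                {"clips": [
                    {"asset": {"type": "video", "src": avatar_url},
                     "start": title_len, "length": avatar_seconds},
                    {"asset": {"type": "text-to-image", "prompt": image_prompt,
                               "width": 1280, "height": 720},
                     "start": image_start, "length": narration_seconds},
                ]},
                # Track 2: the AI voiceover, timed to play over the image.
                {"clips": [{"asset": {"type": "text-to-speech", "text": script,
                                      "voice": "Matthew"},
                            "start": image_start, "length": narration_seconds}]},
            ],
        },
        "output": {"format": "mp4", "size": {"width": 1280, "height": 720}},
    }


edit = build_assembly_edit(
    title="Spring Listings",
    avatar_url="https://example.com/avatar.mp4",
    avatar_seconds=8,
    image_prompt="a bright modern living room at golden hour",
    script="Here are this week's featured homes.",
    narration_seconds=6,
    music_url="https://example.com/music.mp3",
)
print(json.dumps(edit, indent=2))
```

Every clip is positioned by `start` and `length` in seconds, so the whole sequence is plain arithmetic on the timeline rather than a chain of FFmpeg commands.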

Key Features

- Timeline-based editing: Use simple JSON to describe video edits, including layers, scenes, transitions, and effects.

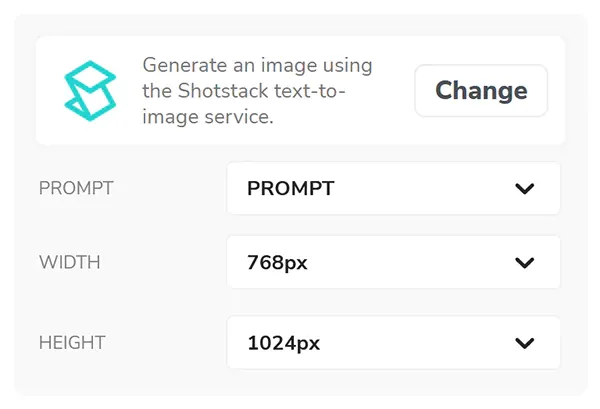

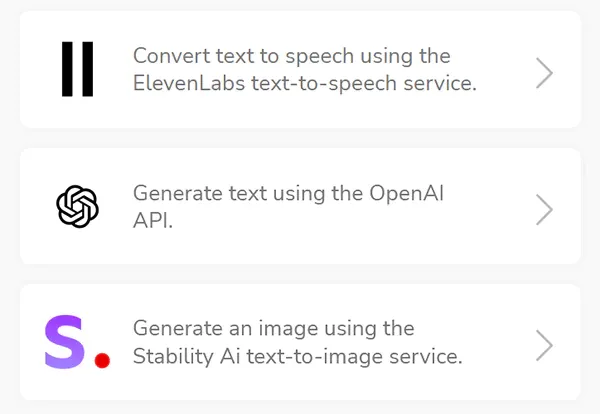

- Integrated AI asset generation services and models: The AI video generator API includes endpoints for common AI tasks using the best open-source text-to-speech and text-to-image models, allowing these assets to be created and placed onto the timeline in a single workflow.

- Asset agnostic: It is designed to work with any media, including video clips generated from the other APIs on this list. You can take a D-ID avatar, a Runway background, and your own brand assets and composite them together.

- Full customization: Programmatically control every aspect of the final video, from layout and text overlays to merging third-party avatar clips.

- Scalable automation: Built for mass production, Shotstack can render hundreds of thousands of personalized videos concurrently.
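Personalization at scale usually means one template and many data rows. The sketch below assumes Shotstack-style merge fields: `{{ NAME }}` placeholders inside the template plus a top-level `merge` list of `find`/`replace` pairs. Treat that syntax as an assumption to confirm in the API reference; the template, the rows, and the helper names are hypothetical.

```python
import copy
import json
import re

# Hypothetical template with assumed {{ KEY }} merge-field placeholders.
TEMPLATE = {
    "timeline": {
        "tracks": [
            {"clips": [{"asset": {"type": "title",
                                  "text": "Hi {{ NAME }}, new homes in {{ CITY }}"},
                        "start": 0, "length": 5}]}
        ]
    },
    "output": {"format": "mp4", "size": {"width": 1280, "height": 720}},
}

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def personalize(template: dict, row: dict) -> dict:
    """Copy the template and attach one merge entry per data field."""
    edit = copy.deepcopy(template)  # never mutate the shared template
    edit["merge"] = [{"find": key, "replace": str(value)} for key, value in row.items()]
    return edit


def preview(text: str, merge: list) -> str:
    """Locally show what a placeholder string becomes after merging."""
    values = {m["find"]: m["replace"] for m in merge}
    return PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), text)


rows = [
    {"NAME": "Ana", "CITY": "Lisbon"},
    {"NAME": "Ben", "CITY": "Austin"},
]
edits = [personalize(TEMPLATE, row) for row in rows]  # one render request per row
print(json.dumps(edits[0]["merge"]))
print(preview(TEMPLATE["timeline"]["tracks"][0]["clips"][0]["asset"]["text"], edits[0]["merge"]))
```

Each element of `edits` would be submitted as its own render job, which is what lets the rendering side fan out across many videos in parallel.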

Strengths

Shotstack’s strength is its unmatched flexibility, scalability, and the fact that it’s asset-agnostic. It’s the perfect solution for building custom video applications, automating personalized marketing campaigns, or creating data-driven videos (e.g., real estate listings, sports highlights). Its usage-based pricing is extremely cost-effective at scale, the infrastructure is battle-tested, and the developer experience is top-notch, with excellent documentation and open-source white-label options.

Shotstack can also integrate with every platform covered in this guide:

- It can take a Synthesia or D-ID output and post-process it (e.g., adding an intro/outro, adding background music, or combining multiple avatar clips into one video).

- It can use HeyGen’s API to produce an avatar speaking Spanish, then use its own video editing API to overlay translated on-screen text and your company logo.

- It can feed output to or from other APIs as part of a workflow, acting as the central hub where everything comes together.

Companies often use Shotstack as the assembly stage in a larger automated content pipeline.
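As an illustration of the post-processing case, this sketch lays an intro, several avatar segments (for example from Synthesia or D-ID), and an outro back to back on one track, with a logo overlay spanning the whole video. It assumes the caller already knows each clip's duration, and that Shotstack clips accept `scale` and `position` and the soundtrack accepts a `fadeInFadeOut` effect; verify those names in the API reference. The helper and URLs are hypothetical.

```python
import json


def stitch_clips(intro_url: str, avatar_clips: list, outro_url: str,
                 logo_url: str, music_url: str,
                 intro_len: float = 3.0, outro_len: float = 3.0) -> dict:
    """Sequence intro, avatar segments and outro; overlay a logo for the full run.

    avatar_clips is a list of (url, seconds) pairs supplied by the caller.
    """
    main = [{"asset": {"type": "video", "src": intro_url}, "start": 0.0, "length": intro_len}]
    cursor = intro_len  # running start time for the next clip
    for url, seconds in avatar_clips:
        main.append({"asset": {"type": "video", "src": url}, "start": cursor, "length": seconds})
        cursor += seconds
    main.append({"asset": {"type": "video", "src": outro_url}, "start": cursor, "length": outro_len})
    total = cursor + outro_len

    logo = {"asset": {"type": "image", "src": logo_url}, "start": 0.0, "length": total,
            "scale": 0.15, "position": "topRight"}  # small corner watermark
    return {
        "timeline": {
            "soundtrack": {"src": music_url, "effect": "fadeInFadeOut"},
            "tracks": [{"clips": [logo]}, {"clips": main}],  # logo track listed first
        },
        "output": {"format": "mp4", "size": {"width": 1280, "height": 720}},
    }


edit = stitch_clips(
    "https://example.com/intro.mp4",
    [("https://example.com/part-1.mp4", 10), ("https://example.com/part-2.mp4", 12.5)],
    "https://example.com/outro.mp4",
    "https://example.com/logo.png",
    "https://example.com/music.mp3",
)
print(json.dumps([c["start"] for c in edit["timeline"]["tracks"][1]["clips"]]))
```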

Limitations

The main limitation is that it’s a mostly developer-centric tool. It requires some technical skill to use the API and is not a simple “enter text, get video” solution for end-users. It’s designed for those who are building those solutions. That said, with AI code generation widely available now, creating JSON templates and simple automation scripts becomes a matter of copying and pasting some code. Shotstack also offers an online bulk video editor for marketing teams and no-code users.

Pricing & Integrations

Shotstack offers a free developer sandbox for unlimited testing. The pay-as-you-go pricing model is simple and transparent. The platform integrates seamlessly with automation tools like Zapier and Make and can orchestrate content from any other AI API into a thousand different on-brand variations.

👉 Unlimited developer sandbox for testing. Start for free today.

2. Synthesia

Synthesia is a powerhouse in the AI video space, best known for its ability to turn scripts into professional videos featuring lifelike talking avatars. It’s an easy-to-use tool for creating polished, studio-quality content without ever touching a camera.

Key Features

- Massive avatar library: Choose from over 230 diverse AI avatars or create a custom “digital twin” of yourself.

- Multi-language support: Generate voiceovers in over 140 languages and accents.

- Corporate templates: Access a wide range of templates designed for training modules, how-to guides, and company updates.

Strengths

Synthesia’s biggest strength is its realism and polish. The avatars, based on real actors, deliver incredibly natural speech with accurate lip-syncing, making them ideal for corporate content. The platform is enterprise-ready, with features like team collaboration and SOC 2 Type II compliance.

Limitations

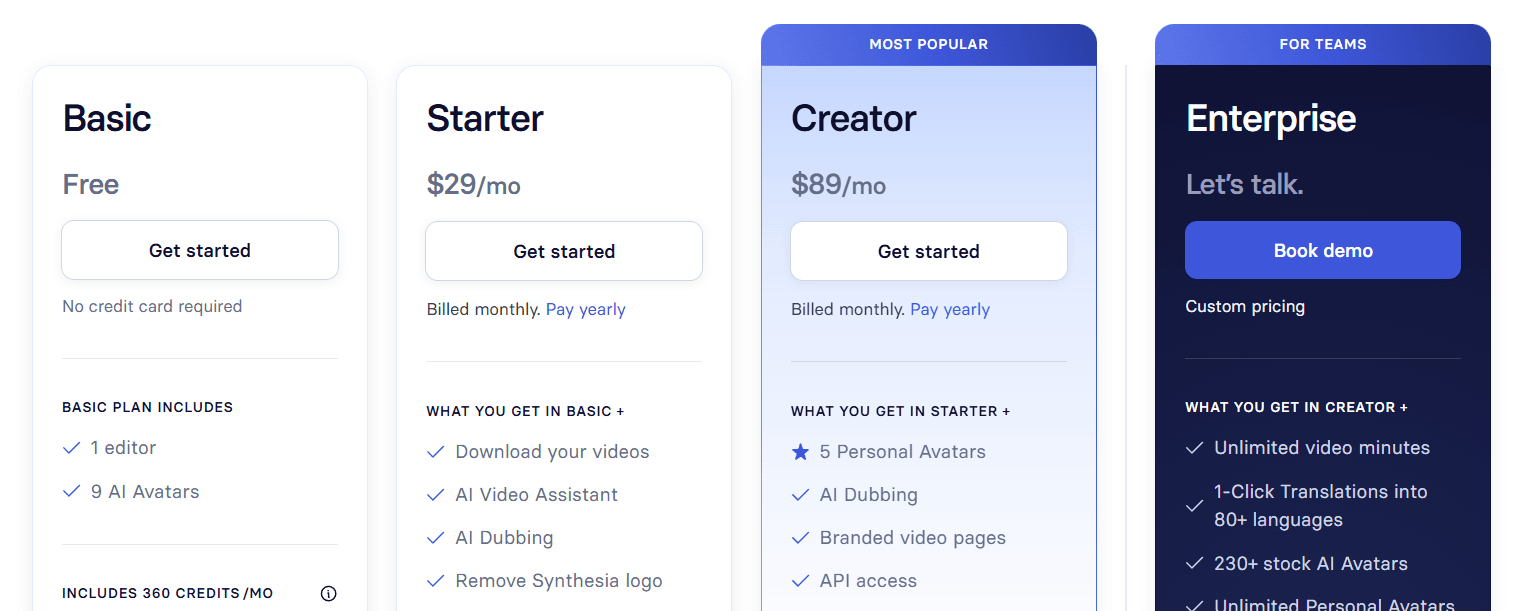

It’s primarily a talking-head video generator. You can’t animate avatars to perform complex actions; they are designed to be presenters. API access is also restricted to higher-tier plans, and the cost per minute can be higher than other solutions.

Pricing & Integrations

Synthesia’s platform is mainly a web studio, but its API allows businesses to automate video creation. The Starter plan starts at $29/month for 10 minutes of video, and the API is only available on the Creator plan and above.

3. HeyGen

HeyGen pushes the boundaries of avatar realism and customization, offering a suite of powerful features that make it a versatile choice for marketers, trainers, and content creators.

Key Features

- Hyper-realistic avatars: Create videos with 500+ stock avatars or clone your own from a photo or video.

- AI Video Translator: Upload a video, and HeyGen translates the speech into 175+ languages while cloning the original voice and matching lip movements.

- Interactive Avatars: Build video chatbots that can engage in real-time conversations with users.

Strengths

HeyGen’s standout feature is its ultra-realistic avatars and flexibility. The ability to change an avatar’s outfit, gestures, and expressions provides a level of control that few competitors offer. Its AI video translator is particularly helpful for content localization, and a well-maintained API supports avatar generation and translation at scale.

Limitations

Pricing can add up for longer or high-volume videos beyond the base tier. Avatars are presenter-style only, so there is no full-body movement or scene interactivity. HeyGen’s own video editing is limited to templates and scene layouts, and the video avatar creation API is available only on the Enterprise plan.

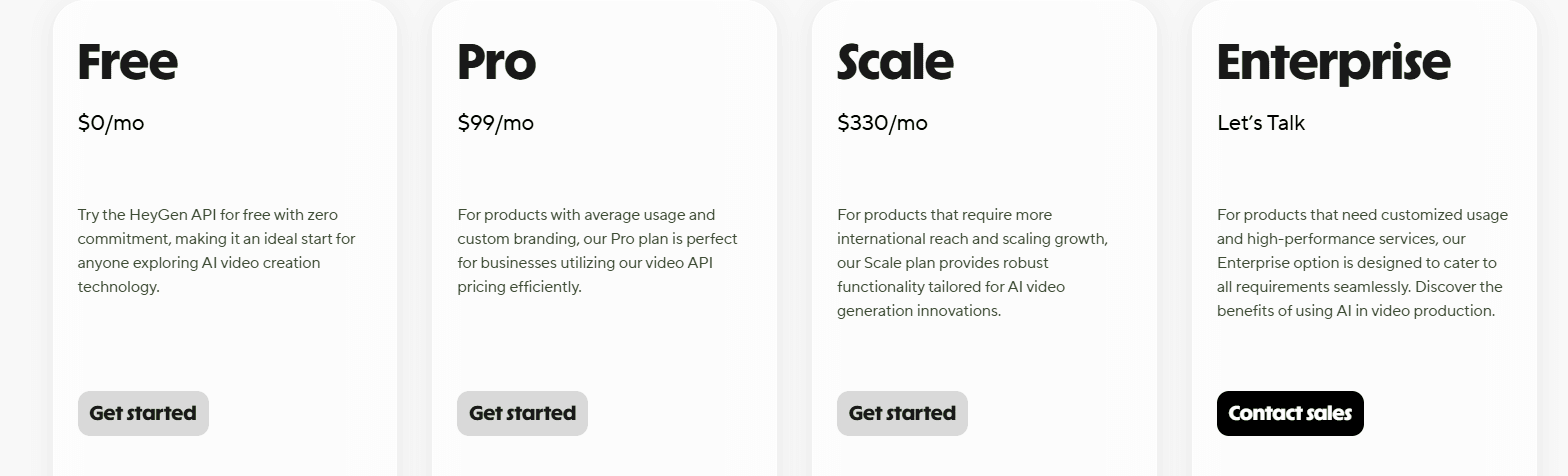

Pricing & Integration

HeyGen offers a basic free plan to get started. Paid plans for the video API begin at $99/month for the Pro tier, offering 100 minutes of generated avatar video. The platform integrates with popular tools, such as Zapier, Canva, and even ChatGPT.

4. D-ID

D-ID is a pioneer in AI-driven video, specializing in its “Creative Reality” technology that animates any still photo into a talking video. If you want to bring a portrait, whether it’s a selfie, a historical figure, or an AI-generated face, to life, D-ID is the tool for the job.

Key Features

- Animate any face: Upload any portrait image and a script to generate a talking head video.

- Audio-driven animation: Upload your own audio track, and D-ID will perfectly sync the avatar’s lip movements.

- Real-time streaming API: A feature that allows for the creation of interactive video agents and live customer support avatars, perfect for integrating with conversational AI models.

Strengths

D-ID is fast, affordable, and accessible. With API plans starting at just $18/month, it has a very low barrier to entry. Its core strength is its simplicity and the unlimited creative freedom it offers in avatar appearance. The real-time streaming API makes it a top choice for building interactive applications.

Limitations

The main limitation is that D-ID is specialized. It only produces talking-head videos on a static background. If you need multi-scene videos with graphics or transitions, you’ll need to export the D-ID clip and use another editor. For instance, you could use the Shotstack API to composite D-ID’s talking head onto other footage or slides. Shotstack offers native integration with D-ID’s avatar API.

Using the Shotstack AI Video Generator API, the following payload can be used to generate a video file:

```json
{
  "provider": "d-id",
  "options": {
    "type": "text-to-avatar",
    "avatar": "jack",
    "text": "Hi, I'm Jack and I'm a talking avatar generated by D-ID using the Shotstack Create API",
    "background": "#000000"
  }
}
```

This will generate an MP4 video file using the Jack avatar and the text provided. The background has been set to #000000 and is optional. For the full list of avatars, refer to the D-ID options in the API reference documentation.
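If you are generating many avatar clips, it helps to build this payload in code. The sketch below reproduces the JSON above, leaves out `background` when it is not set, and prepares the POST request without sending it. The endpoint URL `https://api.shotstack.io/create/stage/assets` and the `x-api-key` header for the Create API are assumptions to confirm in the Shotstack API reference; the function names are hypothetical.

```python
import json
import urllib.request
from typing import Optional

# Assumed sandbox endpoint for the Shotstack Create API; confirm in the API reference.
CREATE_URL = "https://api.shotstack.io/create/stage/assets"


def did_avatar_payload(text: str, avatar: str = "jack",
                       background: Optional[str] = None) -> dict:
    """Build the text-to-avatar payload shown above; `background` is optional."""
    options = {"type": "text-to-avatar", "avatar": avatar, "text": text}
    if background is not None:
        options["background"] = background
    return {"provider": "d-id", "options": options}


def build_request(payload: dict, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST request carrying the payload."""
    return urllib.request.Request(
        CREATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


req = build_request(
    did_avatar_payload("Hi, I'm Jack and I'm a talking avatar.", background="#000000"),
    api_key="YOUR_API_KEY",
)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would submit the job; the response is assumed to
# contain an asset ID that you then check until the MP4 is ready.
```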

Pricing & Integration

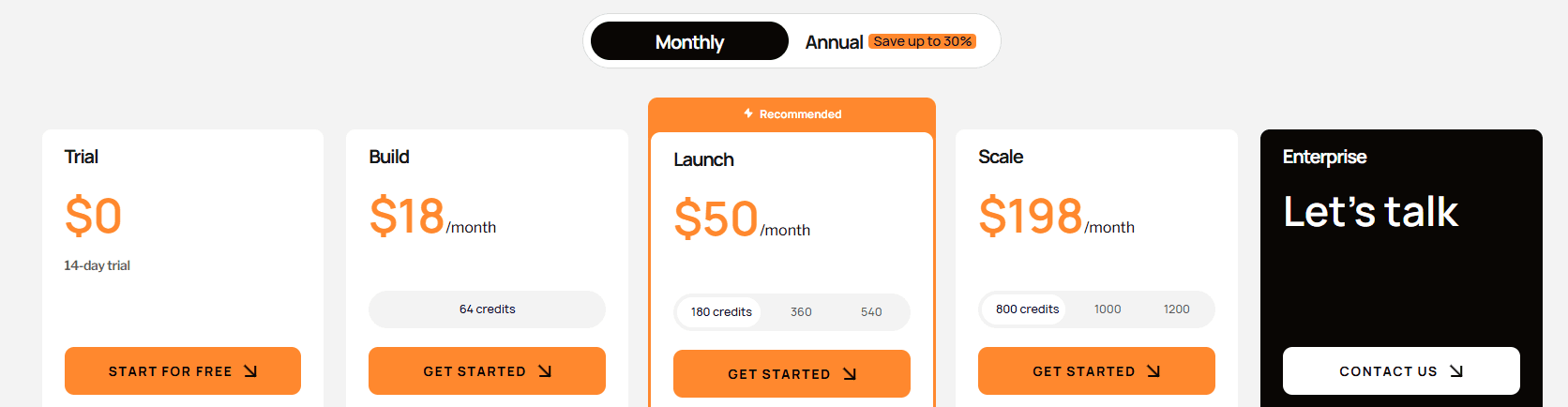

D-ID is built for developers. Its straightforward REST API makes it easy to integrate: you send a POST request with an image and text or audio, then retrieve the finished video URL once processing completes. There is a 14-day free trial, after which you can pick one of four plans according to your needs.
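Here is a minimal sketch of that request-then-retrieve flow against D-ID directly. It assumes D-ID's `POST /talks` endpoint takes a `source_url` and a `script` object, returns an `id`, and that `GET /talks/{id}` reports a `status` of `done` with a `result_url` (or `error`/`rejected` on failure), authenticated with a `Basic` API key header. All of that should be checked against D-ID's API reference. The transport is injectable, so the example at the bottom runs offline with canned responses.

```python
import json
import time
import urllib.request
from typing import Callable, Optional

# Assumed base URL and endpoint shapes; confirm against D-ID's API reference.
D_ID_API = "https://api.d-id.com"

Sender = Callable[..., dict]


def make_sender(api_key: str) -> Sender:
    """Return a function that sends one JSON request to D-ID and parses the reply."""
    def send(method: str, path: str, body: Optional[dict] = None) -> dict:
        data = json.dumps(body).encode("utf-8") if body is not None else None
        req = urllib.request.Request(
            D_ID_API + path,
            data=data,
            method=method,
            headers={"Authorization": f"Basic {api_key}",
                     "Content-Type": "application/json"},
        )
        with urllib.request.urlopen(req) as resp:
            return json.load(resp)
    return send


def create_talk_video(send: Sender, image_url: str, text: str,
                      poll_seconds: float = 2.0, max_polls: int = 60) -> str:
    """Start a talking-head job, then poll until the video URL is ready."""
    created = send("POST", "/talks", {
        "source_url": image_url,
        "script": {"type": "text", "input": text},
    })
    talk_id = created["id"]
    for _ in range(max_polls):
        talk = send("GET", f"/talks/{talk_id}")
        status = talk.get("status")
        if status == "done":
            return talk["result_url"]
        if status in ("error", "rejected"):
            raise RuntimeError(f"talk {talk_id} failed: {talk}")
        time.sleep(poll_seconds)
    raise TimeoutError(f"talk {talk_id} not ready after {max_polls} polls")


def fake_sender(responses: list) -> Sender:
    """Offline stand-in that replays canned responses and records each call."""
    calls = []

    def send(method: str, path: str, body: Optional[dict] = None) -> dict:
        calls.append((method, path))
        return responses.pop(0)

    send.calls = calls
    return send


fake = fake_sender([
    {"id": "tlk_123", "status": "created"},
    {"id": "tlk_123", "status": "started"},
    {"id": "tlk_123", "status": "done", "result_url": "https://example.com/talk.mp4"},
])
video_url = create_talk_video(fake, "https://example.com/portrait.jpg",
                              "Hello from D-ID!", poll_seconds=0)
print(video_url)
```

In production you would pass `make_sender("YOUR_API_KEY")` instead of the fake, or use a webhook if the provider offers one, to avoid polling.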

5. Colossyan

Colossyan Creator is an AI video generator built specifically for training and education. It excels at turning scripts into engaging, interactive learning experiences with AI presenters.

Key Features

- Interactive video: Embed clickable quizzes, branching scenarios, and buttons directly into your videos to engage learners.

- Conversational scenes: Create dialogues between two AI avatars to simulate real-world interactions, like a customer service role-play.

- GPT script assistant: Generate training video scripts directly within the platform.

- Massive voice library: Choose from over 600 AI voices in 70+ languages.

Strengths & Limitations

For L&D departments, Colossyan is a massive time-saver. Its focus on interactivity sets it apart, making training more effective. The ability to create custom avatars from your own footage via their API is another powerful feature for personalizing content.

Because of its specialization, Colossyan isn’t geared toward creative marketing or social media content. The interactive features are also tied to its own player, so they won’t work if you export the video as a standard MP4 file.

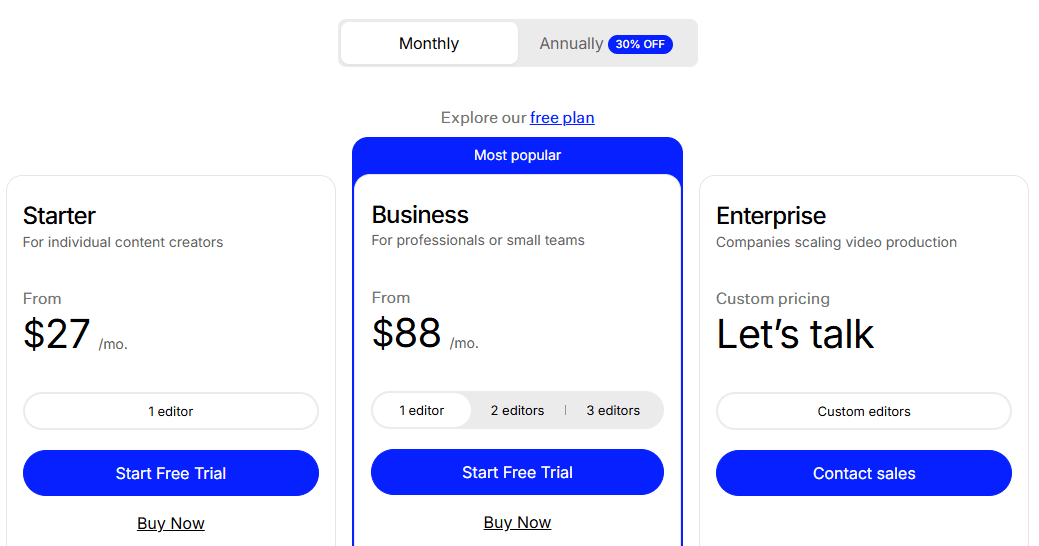

Pricing & Integration

Colossyan offers a free plan for testing, with the Starter plan at $27/month for 15 minutes of video, but it doesn’t include API access. The Business plan at $70/month offers unlimited video generation, making it highly cost-effective for teams producing lots of content. Its API allows for deep integration with any LMS or HR system to automate training video creation.

6. Runway

Runway ML is on the cutting edge of creative generative video. Instead of using avatars, Runway’s models (Gen-1, Gen-2, and now Gen-4) generate original video footage from text prompts, images, or even other videos.

Key Features

- Text-to-video: Describe a scene in text (e.g., “a futuristic cityscape at sunset, cinematic style”) and watch the AI bring it to life.

- Video-to-video: Apply a completely new style to an existing video clip.

- Advanced director tools: Use a “motion brush” to direct the movement of specific objects and maintain character consistency across multiple shots.

Strengths

Runway is one of the leading platforms for visual experimentation and creativity. It’s perfect for artists, filmmakers, and designers looking to prototype ideas or create unique visuals for social media and short films. The platform is constantly evolving, with each new model pushing the boundaries of what’s possible.

Limitations

Generative video is still an emerging technology. Clips are often short (a few seconds), and the quality can be inconsistent. It’s not the right tool for creating videos that require a specific, clear narrative delivered by a presenter.

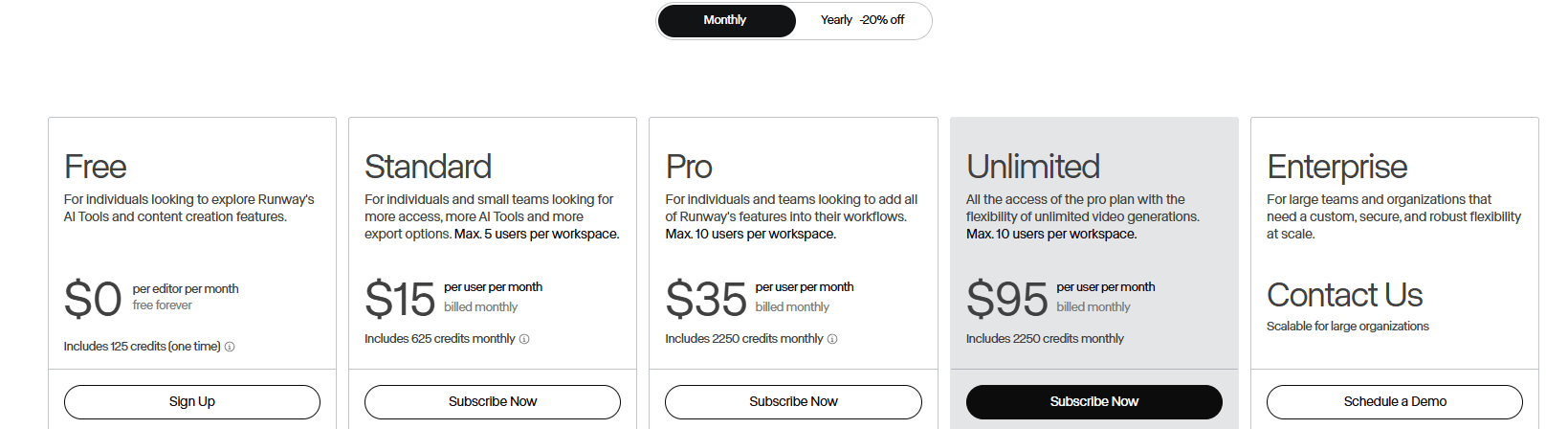

Pricing & Integration

Runway uses a credit-based system, with a free tier to get you started. Paid plans start at $15/month. The API is pay-as-you-go at $0.01 per credit. Its video generation models are also integrated into other tools like Canva’s Magic Studio.

7. Kling

Developed by Kuaishou, Kling is a powerful text-to-video generator making waves with its high-quality output, style flexibility, and developer-first approach.

Key Features

- Multi-style generation: Generate video from the same prompt in different styles, like hyper-realistic, anime, or sketch.

- High-quality output: Supports up to 1080p resolution at 30 fps, with clip durations up to 30 seconds.

- Virtual try-on API: A specialized feature for fashion retail that generates videos of models wearing specific articles of clothing.

Strengths

Kling’s biggest advantages are its aggressive pricing and its API-first design. The consumer plans are significantly cheaper than competitors, and its enterprise API tiers are built for businesses that need to generate video at scale. The quality is impressive, often producing cinematic and photorealistic results.

Limitations

While Kling is impressive, it shares the general shortcomings of AI video: clips are short, sometimes you get weird glitches, and you wouldn’t use it for precise tasks like an avatar delivering exact lines.

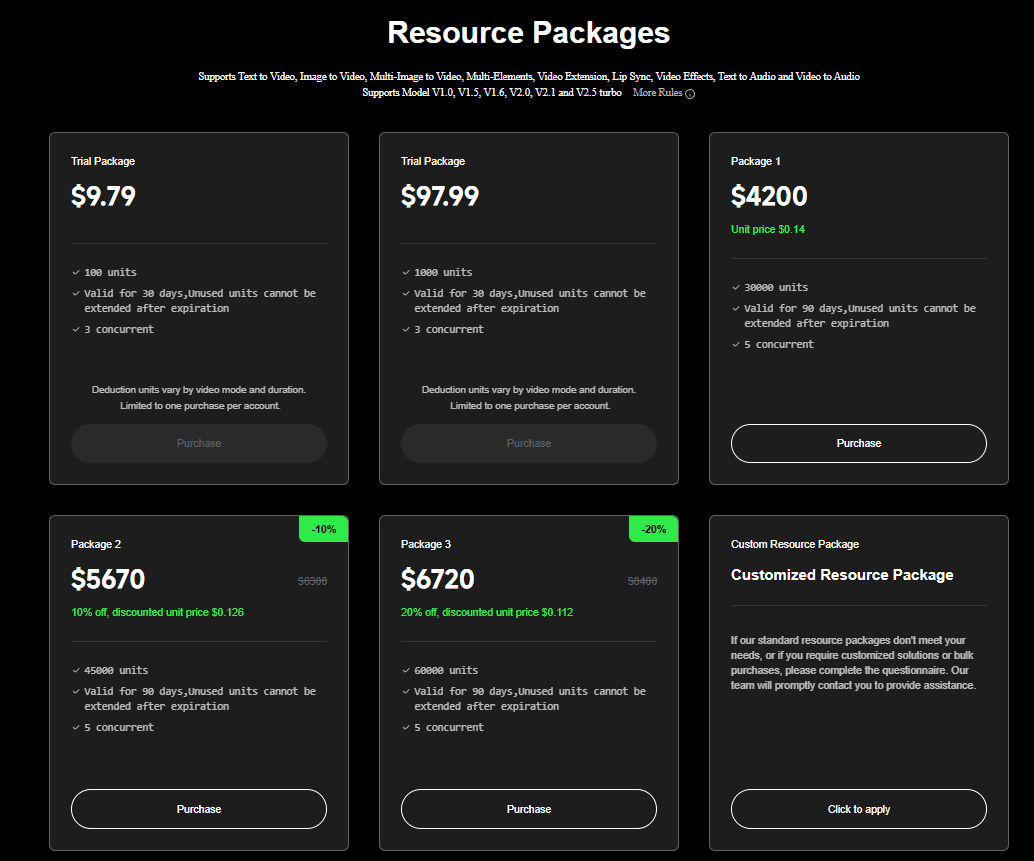

Pricing & Integration

Kling offers a freemium model for its web app. For developers, the platform was built with integration in mind, offering a full API to embed video generation into other applications. The API operates exclusively on a prepaid resource package model, where users purchase bundles of credits for specific capabilities like video generation, image generation, or virtual try-on.

Special Mention: Sora & Veo 3.1

No conversation about AI video would be complete without mentioning the two models that have captured the public’s imagination the most: OpenAI’s Sora and Google’s Veo 3.1. These platforms have demonstrated remarkable capabilities, producing high-fidelity video with a strong grasp of physics and narrative.

So, why aren’t they in our main comparison?

It comes down to our core criteria: accessible public APIs for developers.

As of late 2025, these state-of-the-art models either lack a self-serve API or are in a limited, premium-access preview, placing them in a different category from the other tools on our list.

- OpenAI’s Sora 2: This model is renowned for its unmatched realism and ability to generate clips up to a minute long while maintaining character consistency. Its key strength is its “world simulation” capability, which allows it to respect physics and follow complex, descriptive prompts. It also generates synchronized audio, making it a one-stop solution for a complete audiovisual scene. However, access is currently restricted to a mobile app and select partners, with no public API available for general developer integration.

- Google’s Veo 3.1: Google’s flagship model produces cinematic, high-resolution (up to 1080p) clips with impressive realism and native audio generation. While it has a shorter base duration of 8 seconds, it offers an “extension” feature to create longer sequences. Its major advantage is its developer-focused API, available through Google Cloud (Vertex AI) and the Gemini API, which offers fine-grained control via reference images and video masking. However, it remains in a paid preview and comes at a premium price: $0.40 per second for standard quality and $0.15 per second for the faster model.
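To put the Veo 3.1 preview prices in perspective: one 8-second base clip costs 8 × $0.40 = $3.20 at standard quality or 8 × $0.15 = $1.20 on the faster model, and a full minute of standard output comes to $24. The helper below just encodes that arithmetic using the per-second rates quoted above, assuming extended footage is billed at the same per-second rate; preview pricing may change.

```python
# Per-second list prices quoted above for Veo 3.1, in USD; preview pricing may change.
VEO_RATE_PER_SECOND = {"standard": 0.40, "fast": 0.15}


def veo_cost(seconds: float, tier: str = "standard") -> float:
    """Estimated generation cost in USD for `seconds` of output at the given tier."""
    return round(seconds * VEO_RATE_PER_SECOND[tier], 2)


for seconds in (8, 60):
    print(f"{seconds:>3}s  standard ${veo_cost(seconds):.2f}  fast ${veo_cost(seconds, 'fast'):.2f}")
```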

When these state-of-the-art models eventually become available, they will undoubtedly revolutionize content creation. However, they will likely remain specialized generation engines. The need to take their raw output, edit it, add brand assets, composite it with other media, and integrate it into a scalable workflow will be more critical than ever.

Which AI Video Generator API Is Best for You?

The AI video generator landscape of 2025 is incredibly diverse. The “best” API truly depends on your specific goal.

- For corporate training & presentations: Synthesia and Colossyan are top choices for their polished avatars and learning-focused features.

- For marketing & personalization: HeyGen offers fantastic customization and translation, while D-ID is great for quick, personalized outreach.

- For creative content & art: Runway and Kling lead the pack with their powerful generative models that can create visuals from scratch.

- For automated video editing & custom applications: Shotstack provides the ultimate flexibility and scalability for developers who need to build custom, data-driven video solutions.

We empower developers to build the next generation of video experiences by combining the best of AI generation with the precision of programmatic video editing and automated workflows. Get your free API key to explore the Shotstack API.

Frequently asked questions (FAQs)

When should I choose an AI avatar generator versus a generative video tool?

You should choose an AI avatar generator (like Synthesia, HeyGen, or D-ID) when your primary goal is to deliver a specific, scripted message clearly. These tools are perfect for training modules, corporate announcements, and explainer videos where a human-like presenter is needed to build trust and convey information directly.

In contrast, you should use a generative video tool (like Runway or Kling) when your goal is creative expression or generating unique visual content. These are ideal for creating artistic short films, abstract background visuals for websites, music videos, or ad concepts where the imagery itself is the main focus, rather than a narrated script.

Several platforms mention creating a custom ‘digital twin’ avatar. What does this process typically involve?

Creating a custom avatar, or “digital twin,” usually involves a more hands-on process than using stock avatars. Typically, you will need to record several minutes of high-quality video footage of the person, often following specific instructions like reading a script, looking directly at the camera, and using various facial expressions. This footage is then uploaded to the platform’s service, where their AI models process it over a period of hours or days to create the animatable avatar. This is often an enterprise-level feature and may come with an additional setup cost.

What are the key legal and ethical considerations when using AI video, especially with custom faces or voices?

This is a critical area. The three main considerations are:

- Consent and likeness rights: You must have explicit, written consent from any individual before creating a digital avatar of them or cloning their voice. Using someone’s image or voice without permission can lead to serious legal issues.

- Copyright ownership: Generally, the user who creates the video owns the final output, according to the terms of service of most platforms. However, you should always review the specific terms for commercial usage rights.

- Misinformation: Reputable platforms have strict policies against creating misleading or harmful content, such as impersonating public figures to spread disinformation. Violating these terms can result in an immediate ban.

Can I combine outputs from different AI video APIs? For example, use a D-ID avatar with a Runway background?

Yes, absolutely. This is a common advanced workflow. You could manually generate a talking head video from D-ID, create a cinematic background with Runway, and then use traditional video editing software to combine them. However, for an automated and scalable solution, a platform like Shotstack is ideal. You could use its API to programmatically pull the avatar clip from D-ID, generate a background, and composite them together with text overlays and audio—all in a single, automated workflow.
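A minimal sketch of the compositing step, assuming you already have URLs for a D-ID talking-head clip and a Runway background clip: the background fills the frame with its audio muted, the avatar is shrunk into a corner (picture-in-picture), and a caption sits on top. It assumes Shotstack lists tracks from top layer to bottom layer and that clips accept `scale` and `position` and video assets accept `volume`; verify these in the API reference. The helper name and URLs are hypothetical.

```python
import json


def picture_in_picture(background_url: str, avatar_url: str,
                       caption: str, seconds: float) -> dict:
    """Layer caption > avatar (corner) > background for the same time span."""
    return {
        "timeline": {
            "tracks": [
                # Top layer: caption text along the top of the frame.
                {"clips": [{"asset": {"type": "title", "text": caption},
                            "start": 0, "length": seconds, "position": "top"}]},
                # Middle layer: the talking-head clip, scaled down into a corner.
                {"clips": [{"asset": {"type": "video", "src": avatar_url},
                            "start": 0, "length": seconds,
                            "scale": 0.35, "position": "bottomRight"}]},
                # Bottom layer: the generated background, muted so the avatar is heard.
                {"clips": [{"asset": {"type": "video", "src": background_url, "volume": 0},
                            "start": 0, "length": seconds}]},
            ]
        },
        "output": {"format": "mp4", "size": {"width": 1280, "height": 720}},
    }


edit = picture_in_picture(
    background_url="https://example.com/runway-background.mp4",
    avatar_url="https://example.com/d-id-avatar.mp4",
    caption="Welcome aboard",
    seconds=10,
)
print(json.dumps(edit, indent=2))
```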

How much control do I have over the final video output in these platforms?

The level of control varies significantly.

- Low to Moderate Control (Turnkey solutions): Platforms like Synthesia offer high-quality but templated outputs. You control the script, avatar, and basic branding, but not the detailed scene composition or animation.

- Moderate Control (Creative tools): Runway and Kling give you more creative direction through detailed text prompts, reference images, and “motion brush” tools, but the AI’s final interpretation still involves an element of unpredictability.

- Total Control (Editing APIs): A platform like Shotstack gives you complete, granular control. Because you are defining a video timeline in code (JSON), you can specify the exact size, position, timing, and effects for every single asset in your video.

The pricing models vary a lot (per minute, credits, subscription). Which model is best for my project?

Choosing the right pricing model depends on your usage pattern:

- A monthly subscription (like Synthesia or Colossyan’s unlimited plan) is best for predictable, consistent needs, like a corporate team producing a set number of training videos each month.

- A credit-based system (like Runway or D-ID) is great for variable or experimental use, as you only pay for what you generate. It offers flexibility if your production volume changes month-to-month.

- A pay-as-you-go model based on rendered minutes (like Shotstack) is ideal for API-driven applications and scalable products. It ensures your costs align directly with user activity, which is perfect for startups building video features for their customers.
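A quick way to choose between a flat subscription and per-minute billing is to find the break-even usage: the flat fee divided by the per-minute rate. The numbers below ($50/month unlimited vs $1.25 per minute) are purely hypothetical, and the per-minute figures quoted for different platforms in this article cover different things (avatar generation vs rendering), so compare like with like.

```python
def payg_cost(minutes: float, rate_per_minute: float) -> float:
    """Monthly cost when paying per generated or rendered minute."""
    return minutes * rate_per_minute


def break_even_minutes(flat_fee: float, rate_per_minute: float) -> float:
    """Monthly usage above which a flat, unlimited plan becomes cheaper."""
    return flat_fee / rate_per_minute


def cheaper_option(minutes: float, flat_fee: float, rate_per_minute: float) -> str:
    """Pick the cheaper model; ties go to pay-as-you-go (no commitment)."""
    if payg_cost(minutes, rate_per_minute) <= flat_fee:
        return "pay-as-you-go"
    return "subscription"


# Hypothetical prices: $50/month unlimited vs $1.25 per minute.
print(break_even_minutes(50, 1.25))
for minutes in (10, 40, 100):
    print(minutes, cheaper_option(minutes, 50, 1.25))
```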

Get started with Shotstack's video editing API in two steps:

- Sign up for free to get your API key.

- Send an API request to create your video:

```shell
curl --request POST 'https://api.shotstack.io/v1/render' \
  --header 'x-api-key: YOUR_API_KEY' \
  --header 'Content-Type: application/json' \
  --data-raw '{ "timeline": { "tracks": [ { "clips": [ { "asset": { "type": "video", "src": "https://shotstack-assets.s3.amazonaws.com/footage/beach-overhead.mp4" }, "start": 0, "length": "auto" } ] } ] }, "output": { "format": "mp4", "size": { "width": 1280, "height": 720 } } }'
```

